Designing chatbots

DIGImo: a Chatbot with Digital Emotions

Since 2016, chatbots became a growing trend for many business sectors. Their 24/7 availability and their efficiency at handling repetitive tasks made them a valuable asset. Even though latest advance AI conversational tools improved chatbots in terms functionality and accuracy, there are still challenges to overcome until they can seamlessly carry out conversations with people.

One of these challenges I am addressing in this project relates to emotional intelligence. Emotional intelligence goes beyond informational or transaction tasks and would enable chatbots to be successfully deployed as customer service assistants. Such chatbots would “understand” users’ feelings and respond accordingly.

Challenges

Data set: getting a dataset with real emotion expressed by people while interacting through a chat platform is hard. Research in this area usually deploys open data collections based on movie subtitles or Twitter corpora. While a vast amount of data from these sources can generate solid models, the content might not be optimal for the development of an emotional intelligent chatbot for several reason:

- firstly, movie subtitles are transcribed spoken interaction with enacted, i.e. not real emotions.

- secondly, transcribed spoken interactions are significantly different from chat interactions

- thirdly, twitter data are public messages to which people react with comments: even though in real time, the interaction is rather asynchronous

Local context: whereas Europeans and Americans tend to feel and fully express emotions, Asians are likely to attenuate their emotions as well as inhibiting their overt manifestations. This increases the likelihood that our collected data in Singapore will have a sparse amount of expressed emotions.

Data type: humans can express emotions over single or mixed channels. Emotions expressed through mixed channels, (e.g. vocal cues & words or vocal cues & facial expressions, etc.) are easier to interpret as they are less ambiguous. Single channel emotion on the other side, such as words in written communication can be more challenging to “decode”.

Data annotation: emotion annotation is a notoriously difficult task. Current annotation schemes are based on psychological theories of human interaction but they aren’t optimal for annotating emotions in text messages.

Solutions

For the data set, we opted to collect an own corpus. This approach enabled us to have real (as opposed to enacted) chat interactions.

To minimize the effects of the local context and data type on our data collection, conversations were lead by experts. The experts were trained to enable people to talk freely and express emotions. 3 topics with potential emotional load were selected in advance: customer experiences, heath and personal events with psychological impact.

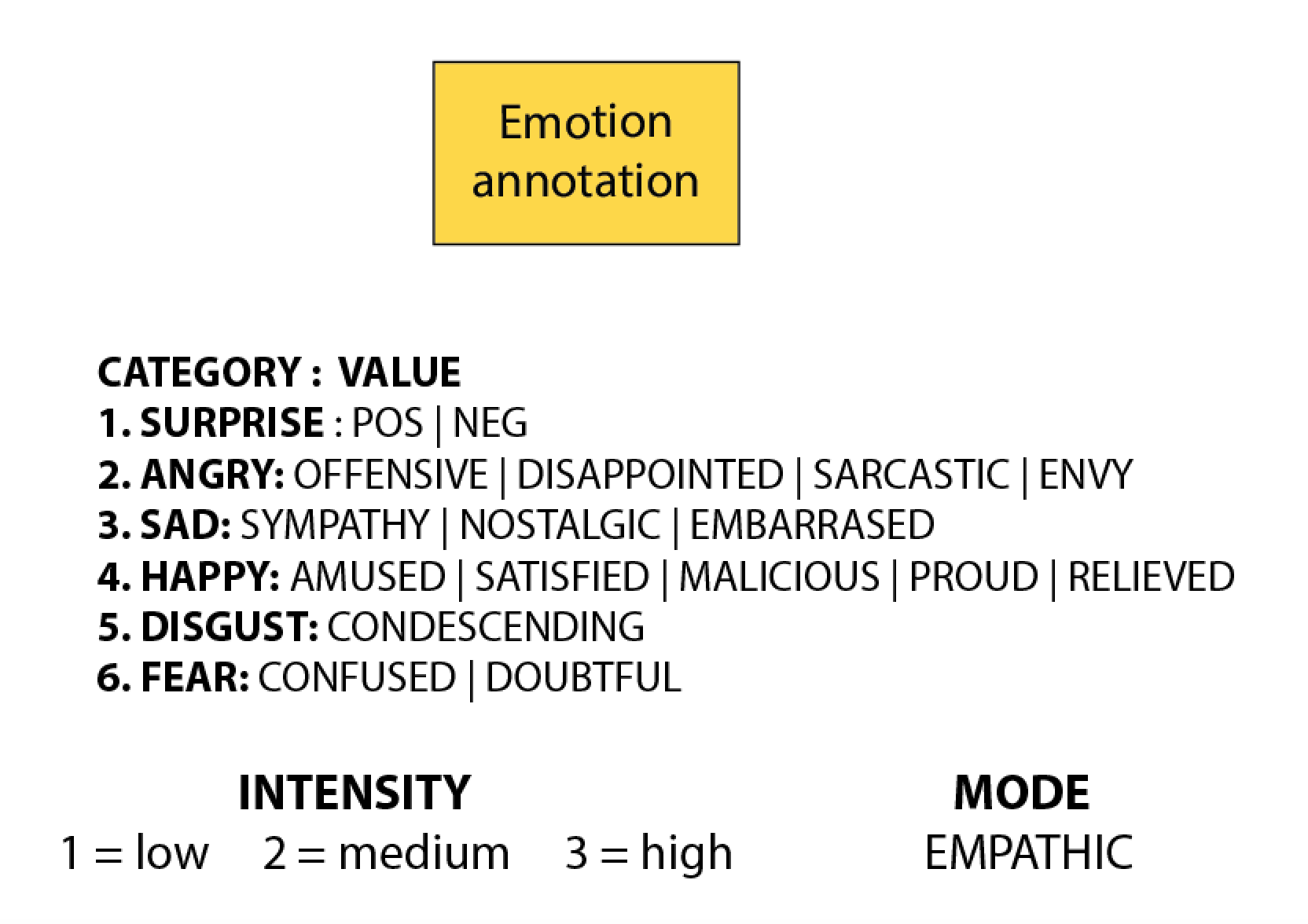

For the data annotation, we elaborated an annotation scheme based on Ekman’s six emotions model such as anger, disgust, fear, happiness, sadness, and surprise. We further enhanced the scheme with additional values that basic emotions could embrace (see Figure 1).

These additional values were meant to help annotators identify the correct emotion expressed in the dialogues. Three intensity levels for emotion expression were defined such as low (1), middle (2) and high (3). We also defined the expression mode, i.e. whether the emotion was expressed empathetically.

The scheme was developed in an iterative process based on the first dialogue samples from the newly collected data. An elaborated guideline with numerous annotation examples was handed to the annotators at the end of the data collection. Below, an example extracted from our guideline. It shows the annotation of ‘surprise’:

<SURPRISE>=”1” VALUE=NEG: “Oh, I didn’t know that …”;

The surprise mark is given by the word “oh”.The intensity value is 1 since there are no other markers of emphasis (no adjectives, no uppercase letters, no exclamation mark.

<SURPRISE>=”2” VALUE=POS MODE= EMPHATIC: “Wow great news”

Here the intensity=2 because of the interjection “wow” and the adjective “great” however, it is expressed empathically, so the intensity is below 3

<SURPRISE>=”3” VALUE=POS> “OMG, really??; <SURPRISE>=”3” VALUE= NEG> “He died?? OMG what a shock!”. Upper case letters, double question or exclamation mark a higher level of emotion – this this case:”3”

Data Collection &

ANNOTATION

A total of 60 participants interacted over a chat platform with our experts enabling to collect 7027 dialogue turns.

The sub-topics covered in the dialogues included: experiences in retail and restaurant services, issues concerning home renovation, work, unemployment, personal relationships and family matters (e.g. death of a relative, getting married and going through break-ups), education (e.g. studies, course training, school), hobbies, army, health issues (e.g. lack of sleep, depression, illness, weight management, nutrition, etc.), holiday and experience abroad.

The data was collected and annotated over a period of three months by two annotators. The inter-agreement reliability calculations were performed using Krippendorf alpha measurements on 10% of the entire data. The results indicate a high inter-agreement rate of 0.817%.

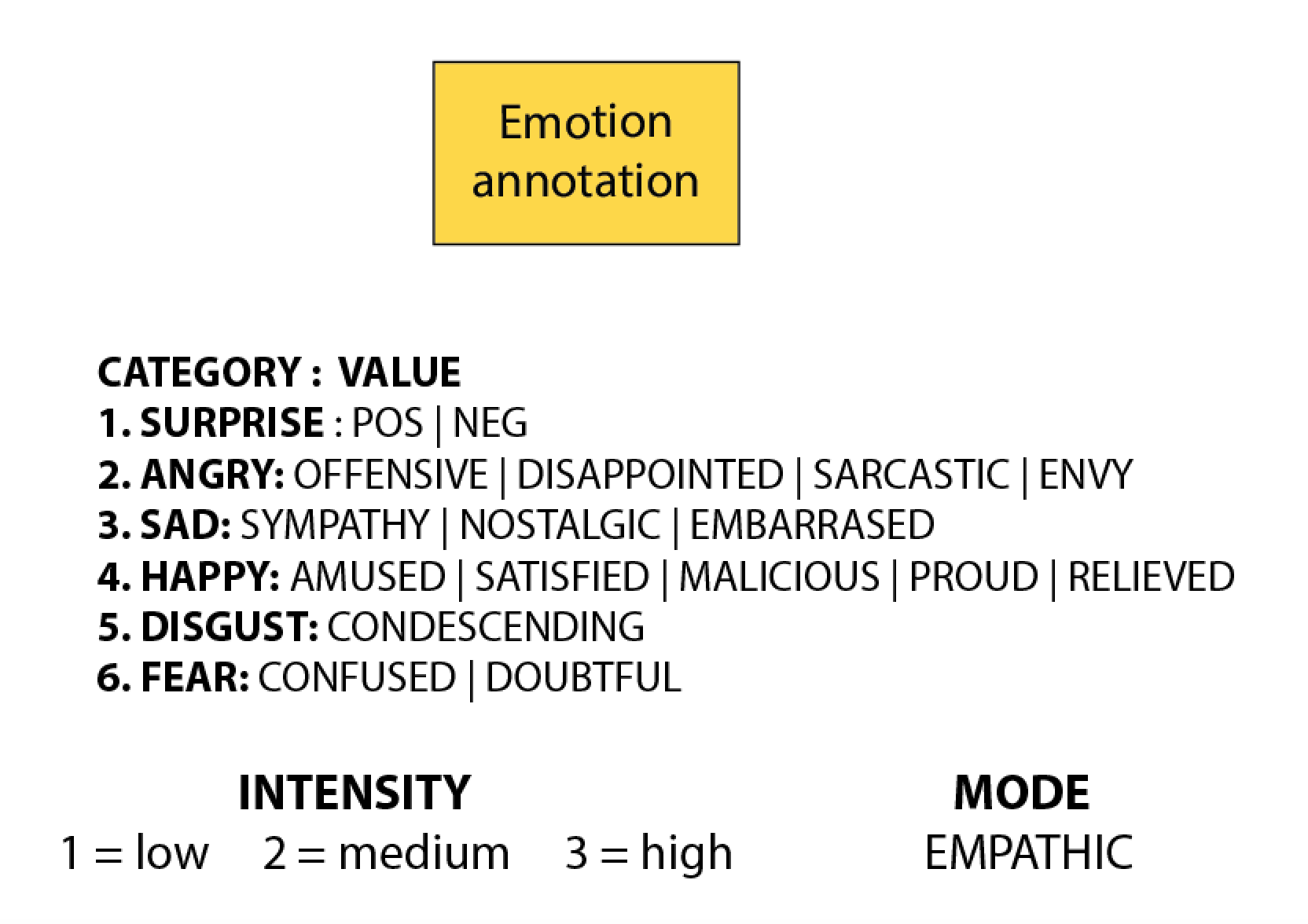

The data collection enabled us to tag about 801 emotions with most frequent emotions being angry (29%) and happy (28%) as shown in Figure 2.

Prototype

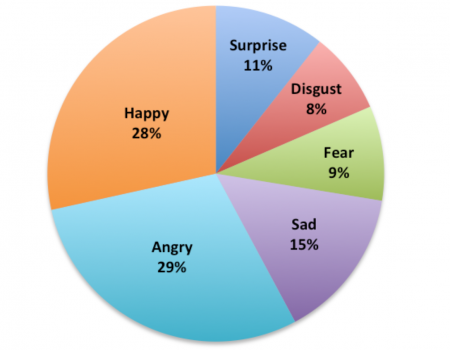

For our first DigiMo prototype, we we used Cakechat, an open source project for emotional generative dialogue systems. Cakechat implements a sequence-to- sequence dialogue model (HRED) obtained from pre- trained models. The models were trained on carefully pre- processed 11 GB Twitter data.

We fed our own data collection to Cakechat, using a dictionary of 50k words. Statements with no emotional expression were labeled as ‘neutral’. The learning rate was set at 0.01. For the encoded/decoded sequence maximum of 30 tokens are allowed. As such, we trimmed and readjusted the turns to fit this condition. Both encoder and decoder contain 2 GRU layers with 512 hidden units each.

The architecture of DigiMo is depicted in Figure 3.

testing

Our first prototype lacked an emotion classifier for the user input. Yet, we were able to test the chatbot answers by manually adding the emotion classification of each user input and testing the system over the terminal: <user_input>: “I am really scared of this exam” [FEAR].

- Automatic evaluation

We tested our model experimenting with different epochs and batch sizes. During training, we controlled context- sensitive and context-free perplexity values. The best results – context free & context sensitive perplexity, both with a low value of 29 – were achieved with a system configuration using a batch size of 128, 15 epochs, free- context perplexity and context size equal to 3.

- Expert evaluation

We further tested DigiMo output with six human expert evaluators – three linguists and three chatbot developers. For this purpose, we chose a subset of emotions ‘happy’ (positive), ‘angry’ (negative) and ‘no emotion’ (neutral statements); we also chose ‘disgust’ (negative) as an emotion most difficult to detect and correctly annotate in our corpus.

We manually generated 12 sentence each of the four emotions (happy, angry and disgust and no emotion) programmed DigiMo to respond automatically to each of these sentences – see Table 2. We obtained a total of 192 pair sentences. Experts were showed these pairs and were asked to evaluate DigiMo’s replies as (answer could work in a specific context

DigiMo achieved a total accuracy across our sample data of 78.20% calculated as the number of Nsuitable + Nneutral) / Ntotal *100. Highest average values were achieved for chatbot answers expressing happiness 85.75%, the highest values being achieved for combinations matching happy emotions for both user and DigiMo (95.83%).

An interesting case is the combination of user:angry and chatbot:happy (88.88%). This combination, despite being a mismatch achieved many accurate responses. A closer look revealed the fact that this combination creates sarcastic but at the same time suitable responses, e.g. (User: “my phone is out of battery”, DigiMo: “awesome”). Lowest average values were achieved for chatbot answers loaded with angry emotions (67.01%), the lowest value being achieved by the combination: user:happy & chatbot:angry (52.77%).

future work

We believe that for a first prototype DigiMO shows very promising results. Its responses are emotionally appropriate to our input thanks to our data collection and careful annotation.

In the future, we plan to develop and test an emotion classifier and incorporate the models into a task- oriented chatbot deployed in customer service. This is by no means an easy task as unlike sentiment analysis, emotion detection focuses on detecting several emotion categories going beyond the binary negative/positive classification. This classification complexity makes emotion detection a more challenging task. Secondly, relying on text only, i.e. in the absence of speech, which is the typical case of text-based chatbot, makes the emotion detection much harder. Intensity varies according to user personality, chatting habits and culture. In Singapore, we would expect people to express emotions rather moderately, which is the typical way for Asian culture known for the tendency towards introvert pattern of expression.

More detailed information about this project can be found in the paper:

“DigiMo – towards developing an emotional intelligent chatbot in Singapore”